ARD and Level 2 Data

Analysis Ready Data (ARD)

Planet, CEOS and the USGS have implemented the concept of Analysis Ready Data in slightly different ways. The different satellite platforms and sensors require different adjustments, but some steps, such as orthorectification and the restructuring of the data into a new tiling grid, are used by all providers.
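
To make the idea of a tiling structure concrete, the following Python sketch maps a projected coordinate to a tile index on a regular square grid and back to the tile's bounds. The grid origin and the 150 km tile size are hypothetical placeholders, not the official parameters of any provider's grid. Real ARD grids use their own projections, origins and, in some cases, overlapping tiles.

```python
import math

# Hypothetical grid definition, NOT any provider's official parameters:
# upper-left corner of the grid in a projected CRS (metres) and tile edge length.
GRID_ORIGIN_X = 0.0
GRID_ORIGIN_Y = 1_000_000.0
TILE_SIZE_M = 150_000.0


def tile_index(x: float, y: float) -> tuple[int, int]:
    """Return (h, v) of the tile containing the projected point (x, y).

    h counts tile columns eastward from the origin; v counts tile rows
    southward, since the origin is the grid's upper-left corner.
    """
    h = math.floor((x - GRID_ORIGIN_X) / TILE_SIZE_M)
    v = math.floor((GRID_ORIGIN_Y - y) / TILE_SIZE_M)
    return h, v


def tile_bounds(h: int, v: int) -> tuple[float, float, float, float]:
    """Return (xmin, ymin, xmax, ymax) of tile (h, v) in grid coordinates."""
    xmin = GRID_ORIGIN_X + h * TILE_SIZE_M
    ymax = GRID_ORIGIN_Y - v * TILE_SIZE_M
    return xmin, ymax - TILE_SIZE_M, xmin + TILE_SIZE_M, ymax


print(tile_index(160_000, 900_000))  # (1, 0)
print(tile_bounds(1, 0))             # (150000.0, 850000.0, 300000.0, 1000000.0)
```

Because every scene is cut to the same fixed grid, a user can request "tile (1, 0)" across many dates without dealing with changing swath footprints.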

While Planet also puts a strong focus on normalizing reflectance values across sensors and atmospheric conditions, thus actually manipulating the values themselves, this approach seems less advanced at the USGS and CEOS. Their ARD seems to focus more on restructuring the image swath into a tile system and making this data accessible together with meaningful metadata. Planet also already delivers information masks with the images, identifying clouds and other areas that should be excluded from analysis. Planet and the USGS apparently plan to use their established hosting and distribution solutions for the ARD data families as well, while CEOS, interestingly, offers to outsource hosting and processing to external, paid partner data providers.

In general, Planet's approach seems to reach further than the other approaches, working directly on the images and reflectance values themselves and already performing the first preprocessing steps.

Since the creation of ARD takes away some freedom from the user, it needs to be carefully deliberated which steps can and should be performed. Restructuring the tile grid or changing the backend hosting and distribution solutions will most likely not upset anyone, but techniques that alter the data, or the information that can be retrieved from the images, are more sensitive. To understand what users want, it is necessary to identify the preprocessing steps that the majority of users perform anyway. Performing these steps in advance removes a large workload from the user and thus lowers the barrier to entry for working with EO products. The delivery of the data is also important: users need to be able to find and access their relevant data easily, without being flooded with cloudy images or tiles that contain only small strips of imagery.
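
The delivery concern can be illustrated with a simple catalogue filter that drops cloudy tiles as well as tiles covering only a thin strip of imagery. This is a minimal sketch: the `TileRecord` fields and both thresholds are hypothetical and not part of any provider's metadata schema.

```python
from dataclasses import dataclass


@dataclass
class TileRecord:
    """Hypothetical catalogue entry; field names are illustrative only."""
    tile_id: str
    cloud_cover: float     # fraction of imaged pixels flagged as cloud (0..1)
    valid_fraction: float  # fraction of the tile footprint covered by imagery (0..1)


def usable_tiles(records, max_cloud=0.2, min_valid=0.5):
    """Keep tiles that are mostly cloud-free and not just a thin strip of data.

    The default thresholds are arbitrary examples, not provider defaults.
    """
    return [r for r in records
            if r.cloud_cover <= max_cloud and r.valid_fraction >= min_valid]


records = [
    TileRecord("A", cloud_cover=0.05, valid_fraction=0.95),  # clear, full tile
    TileRecord("B", cloud_cover=0.60, valid_fraction=1.00),  # too cloudy
    TileRecord("C", cloud_cover=0.00, valid_fraction=0.10),  # thin strip only
]
print([r.tile_id for r in usable_tiles(records)])  # ['A']
```

Exposing the thresholds as parameters keeps the decision with the user, which matches the concern above about ARD taking away freedom.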

ESA Sentinel-2 Level-2A Data

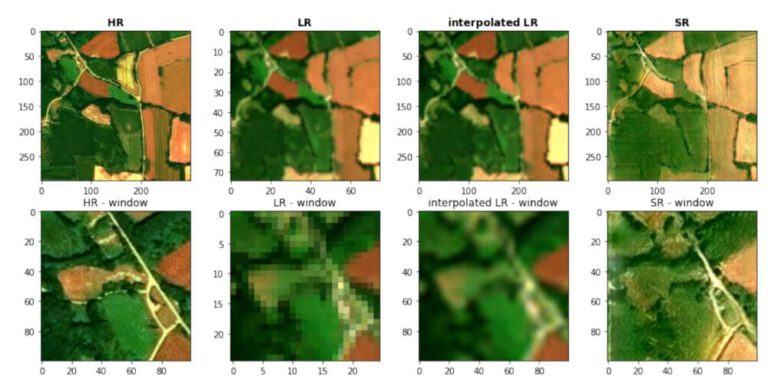

The Level-2A processing level describes a preprocessing level of Sentinel-2 imagery that provides bottom-of-atmosphere (BOA) reflectance values by using an ATCOR (Atmospheric and Topographic Correction) algorithm. This method uses reference areas to calibrate the ground reflectance, as well as atmospheric parameters, to normalize the data and make reflectance values usable across different acquisition dates and weather conditions.
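
In practice, L2A reflectance is distributed as scaled integers. The sketch below converts digital numbers (DN) to BOA reflectance as `(DN + offset) / quantification`. It assumes the values documented for Sentinel-2 products from processing baseline 04.00 onwards (quantification value 10000, additive offset -1000; older products use an offset of 0). Both values should be read from each product's metadata rather than hard-coded.

```python
import numpy as np


def dn_to_boa_reflectance(dn, quantification=10000.0, add_offset=-1000.0, nodata=0):
    """Convert Sentinel-2 L2A digital numbers (DN) to BOA reflectance.

    reflectance = (DN + add_offset) / quantification

    The defaults assume processing baseline 04.00 or later; older products
    use add_offset = 0. Read both values from each product's metadata.
    Pixels equal to `nodata` are returned as NaN.
    """
    dn = np.asarray(dn, dtype=np.float64)
    return np.where(dn == nodata, np.nan, (dn + add_offset) / quantification)


refl = dn_to_boa_reflectance([0, 1000, 3500])  # NaN (no data), 0.0 and 0.25
```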

Importantly, L2A processing automatically creates masks for the user. Different types of clouds (which alter the reflectance values) are identified, as well as areas with missing or defective data, water bodies, vegetation, snow and cloud shadows. These areas are identified with several index and threshold methods, so that users can restrict their analysis to the pixels that are relevant to their process and belong to the classes they are interested in.
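
The scene classification map (SCL) delivered with L2A products encodes these classes as integer codes. A minimal sketch of using it to keep only the pixels of interest, assuming the SCL raster has already been loaded as a NumPy array at the same resolution as the reflectance band. The codes below follow ESA's SCL legend; which classes to keep is the user's choice.

```python
import numpy as np

# Selected Sentinel-2 L2A Scene Classification (SCL) codes from ESA's legend.
SCL_NO_DATA = 0
SCL_SATURATED_OR_DEFECTIVE = 1
SCL_CLOUD_SHADOW = 3
SCL_VEGETATION = 4
SCL_NOT_VEGETATED = 5
SCL_WATER = 6
SCL_CLOUD_MEDIUM_PROBABILITY = 8
SCL_CLOUD_HIGH_PROBABILITY = 9
SCL_THIN_CIRRUS = 10
SCL_SNOW = 11


def scl_mask(scl, keep=(SCL_VEGETATION, SCL_NOT_VEGETATED, SCL_WATER)):
    """Return a boolean mask that is True where the SCL class is in `keep`."""
    return np.isin(np.asarray(scl), keep)


scl = np.array([[4, 9],
                [6, 3]])                   # vegetation, cloud / water, cloud shadow
refl = np.array([[0.30, 0.80],
                 [0.02, 0.05]])
mask = scl_mask(scl)                       # [[True, False], [True, False]]
clear_refl = np.where(mask, refl, np.nan)  # cloud and shadow pixels become NaN
```

Passing a different `keep` tuple, for example only `SCL_VEGETATION`, restricts the analysis to a single class.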

The processor that performs all these tasks, namely the ATCOR-based BOA reflectance calculation and the scene classification map, is called Sen2Cor. L2A products become available after an additional processing time, usually about 48 to 60 hours after the corresponding Level-1C products are published.

This processing level has been operational since early 2018 and was planned to be fully available by summer 2018.

L2A data by Theia

The main difference between the L2A data produced by Theia and by ESA is the processor. Theia uses the MACCS (Multi-sensor Atmospheric Correction and Cloud Screening) processor instead of Sen2Cor, which means that different atmospheric correction methods are implemented, using other bands to identify clouds and water vapor as well as other indices and thresholds. Both processors aim to provide bottom-of-atmosphere reflectance values, but, most importantly, MACCS additionally uses multi-temporal and multi-sensor information. After clouds and other unwanted obstructions are masked, acquisitions from other dates are used to build a composite of the scene, filling the missing information of one image with information from other images. This composite gives the expected reflectance of a region, which can then be compared with the actual reflectance of a new image: if the observed reflectance differs too much from the expected reflectance, the area can be assumed to be obstructed by clouds, cloud shadows, fog, etc.
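
The multi-temporal principle can be sketched in a few lines: build a reference from the most recent clear observation of each pixel, then flag pixels of a new image whose reflectance deviates too much from that reference. This is a strongly simplified illustration of the idea described above, not the actual MACCS algorithm; the single-band input, the function names and the 0.03 threshold are assumptions.

```python
import numpy as np


def build_reference(stack, clear):
    """Per-pixel composite of the most recent clear observation.

    stack: (t, rows, cols) reflectance of one band, ordered oldest -> newest.
    clear: boolean array of the same shape, True where the pixel was clear.
    Pixels that were never observed clear remain NaN.
    """
    ref = np.full(stack.shape[1:], np.nan)
    for t in range(stack.shape[0]):
        ref = np.where(clear[t], stack[t], ref)  # newer clear values overwrite older ones
    return ref


def multitemporal_change_flag(observed, reference, threshold=0.03):
    """Flag pixels whose reflectance deviates from the reference by more than `threshold`.

    Brighter than expected hints at clouds or fog, darker at cloud shadows.
    Pixels without a reference (NaN) are never flagged.
    """
    deviation = np.nan_to_num(np.abs(observed - reference), nan=0.0)
    return deviation > threshold


stack = np.array([[[0.05, 0.06]],    # date 1
                  [[0.40, 0.07]]])   # date 2: first pixel cloudy
clear = np.array([[[True, True]],
                  [[False, True]]])
reference = build_reference(stack, clear)               # [[0.05, 0.07]]
observed = np.array([[0.35, 0.08]])                     # new acquisition
flags = multitemporal_change_flag(observed, reference)  # [[True, False]]
```

A single fixed threshold is the simplest possible choice; an operational processor would combine several bands and tests.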

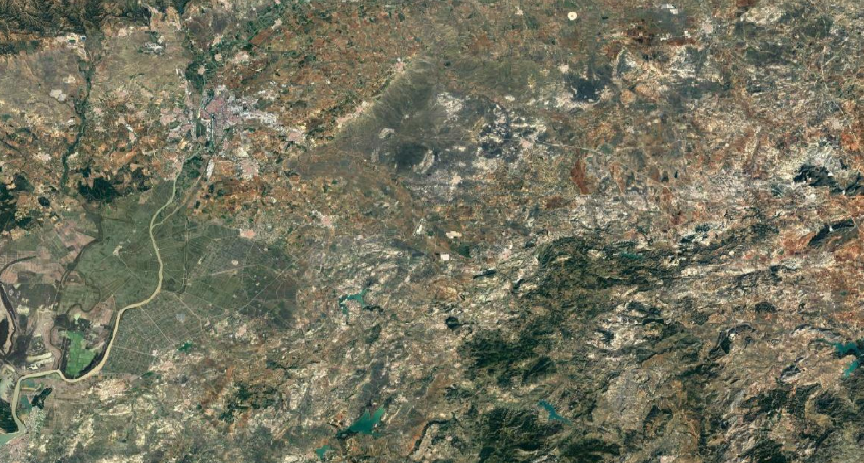

The data are available only for a fragmented area of the world, spanning most of western and southern Europe and selected areas in South America, West Africa and the Indian Ocean, as well as some regions in Asia and Oceania.

Interestingly, images from the Sentinel, VENμS, Landsat and Trishna satellites are all taken into account. ESA and other data providers with dedicated EO missions generally do not merge data from other providers and instead publish and use only imagery from their own sensors, while Theia is able to combine information from multiple sources regardless of origin or the politics behind the scenes of the space industry.